Privacy statement: Your privacy is very important to Us. Our company promises not to disclose your personal information to any external company with out your explicit permission.

The arrival of the digital, networked era, and in particular the development of broadband wireless networks, has created an opportunity to carry high-volume data services such as audio and video over wireless links. Because audio and video convey information so directly to the senses, demand for such applications has grown steadily. Wireless multimedia combines technologies from the fields of multimedia and mobile communication and has become an active topic in communications. Linux is used as the operating system because of its open-source kernel, giving the whole system good real-time performance and stability. The system uses ARM11 as the core processor, encodes and decodes video with the H.264 codec standard, and transmits audio and video over the wireless network. It makes full use of the Multi-Format video Codec (MFC) integrated in the S3C6410 microprocessor, which improves the cost-performance ratio of the system. The system offers a practical solution for wireless audio and video transmission and can be applied in fields such as remote monitoring and video telephony.

1. Overall system design

The audio and video acquisition module captures the analog signals and passes the captured audio and video data to the audio and video management module. There the data is compressed, given a packet header, and sent to the peer over WiFi. The peer processes the received data, determines the audio or video frame type from the header, and passes the payload to the decompression module, which recovers the audio and video data. Both communicating devices contain an embedded audio and video management module and a wireless transceiver module. The WiFi transceiver module operates in the 2.4 GHz band and complies with the IEEE 802.11b wireless LAN standard.
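The header-based dispatch described above can be sketched as follows. The source does not give the header layout, so the fields used here (a 1-byte frame type, a 4-byte payload length, and an 8-byte timestamp), the type codes, and all function names are assumptions made purely for illustration.

```python
import struct

# Hypothetical header layout (not from the source): network byte order,
# 1-byte frame type, 4-byte payload length, 8-byte timestamp in milliseconds.
HEADER = struct.Struct("!BIQ")
FRAME_AUDIO = 0x01  # assumed type codes
FRAME_VIDEO = 0x02


def pack_frame(frame_type: int, pts_ms: int, payload: bytes) -> bytes:
    """Prefix a compressed frame with the illustrative header."""
    return HEADER.pack(frame_type, len(payload), pts_ms) + payload


def unpack_frame(packet: bytes):
    """Split a packet into (frame_type, pts_ms, payload)."""
    frame_type, length, pts_ms = HEADER.unpack_from(packet)
    payload = packet[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated packet")
    return frame_type, pts_ms, payload


def dispatch(packet: bytes) -> str:
    """Pick the decoder for a packet based on the frame type in its header."""
    frame_type, _pts_ms, _payload = unpack_frame(packet)
    if frame_type == FRAME_AUDIO:
        return "audio-decoder"
    if frame_type == FRAME_VIDEO:
        return "video-decoder"
    raise ValueError(f"unknown frame type {frame_type}")
```

Carrying the payload length in the header lets the receiver find frame boundaries, and carrying a timestamp makes the later synchronization step possible without extra signalling.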

2. System hardware design

The hardware is built around an ARM11 core microprocessor clocked at 532 MHz, which meets the real-time processing requirements. The board integrates 256 MB of SDRAM, 2 GB of flash, audio recording and playback interfaces, a camera video interface, a wireless WiFi interface, an LCD interface, an SD card interface, and more. The software platform uses the open-source Linux 2.6.28 kernel, yaffs2 as the root file system, and Qtopia 4.4.3 as the user interface, providing a good platform for development, debugging, and system design.

2.1 Audio and video acquisition module

Audio uses the integrated IIS (Inter-IC Sound) bus interface and the WM9714 audio chip. IIS is a bus standard defined by Philips for transferring audio data between digital audio devices; it specifies both the hardware interface and the format of the audio data. On the basis of this hardware and interface specification, the system implements integrated audio output, line-in input, and microphone input.

Video capture uses the OV9650 CMOS camera module, with a resolution of up to 1.3 megapixels, which connects directly to the camera interface of the OK6410 development board. The board is suited to high-end consumer electronics, industrial control, car navigation, multimedia terminals, industry PDAs, embedded education and training, and personal learning. The camera module is structurally simple and comes with hardware drivers, which simplifies use and debugging.

2.2 Wireless transmission module

The wireless transmission module is a WiFi module operating in the public 2.4 GHz band. It complies with the IEEE 802.11b/g standard, so the terminal can be connected to the Internet in later development. The maximum data rate is 54 Mb/s, and both WinCE and Linux are supported. The communication range can reach 100 m indoors and 300 m in open outdoor space. With only a simple configuration change in ARM-Linux, the system can switch from Ethernet connection mode to ad hoc mode for direct communication between two devices. After startup, a Qt-based window makes it easy to switch between connection modes.

WiFi also scales well: the terminal can reach a WAN through a wireless router, which gives it good application prospects. In addition, most mobile and other terminal devices already have WiFi, and the software can later be moved to Android, which simplifies development and porting. This reduces the development cost and cycle of real-time audio and video transmission and offers a new form of audio and video communication for modern mobile communication.

After the WiFi driver is configured, application-layer programming is exactly the same as for an Ethernet interface. Because this design carries a large volume of audio and video data, UDP is not a good fit: when the data volume is too large or the signal is poor, UDP loses packets heavily. The connection-oriented TCP protocol is therefore chosen to ensure that the audio and video are delivered. Because TCP acknowledges received data and retransmits lost segments, the application does not need to handle packet loss on the LAN itself, which provides a reliable basis for the system functions.
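One practical consequence of choosing TCP is that it delivers a byte stream rather than discrete messages, so the receiver has to recover frame boundaries itself. The sketch below shows one common way to do that with a length prefix. It is not taken from the source: the 4-byte prefix and the function names are assumptions, and a local stream socket pair stands in for the TCP connection between the two devices.

```python
import socket
import struct

LEN_PREFIX = struct.Struct("!I")  # assumed 4-byte big-endian length prefix


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one frame; sendall() keeps writing until every byte is handed over."""
    sock.sendall(LEN_PREFIX.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes, since recv() may return fewer."""
    chunks = []
    remaining = count
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection mid-frame")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed frame."""
    (length,) = LEN_PREFIX.unpack(recv_exact(sock, LEN_PREFIX.size))
    return recv_exact(sock, length)


def demo() -> list:
    """Send two frames over a connected local stream socket pair and read them back."""
    a, b = socket.socketpair()  # stand-in for the TCP link between the devices
    try:
        send_frame(a, b"audio-frame")
        send_frame(a, b"video-frame")
        return [recv_frame(b), recv_frame(b)]
    finally:
        a.close()
        b.close()
```

The loop in `recv_exact` matters on a wireless link, where a single `recv()` call often returns only part of a large video frame.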

3. Software design

The software is divided into the user interface and modules for data processing, transmission, and related functions.

3.1 Multi-thread based software overall design

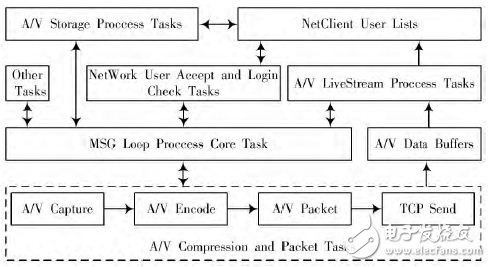

The system software architecture is shown in Figure 1. In each direction, the audio and video streams are captured, compressed, transmitted, received, decompressed, and played back. Each module runs in its own thread, and semaphores control the order in which the threads run, so that together they form a processing loop that handles the audio and video data streams efficiently. The system functions are modular, which makes them easy to modify and port, and the code is compact.

Figure 1 Software Architecture
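The thread-per-module arrangement coordinated by semaphores can be illustrated with a small pipeline. This is a sketch under stated assumptions, not the authors' code: the buffer class, the stage names, the buffer capacity, and the string "processing" are all hypothetical stand-ins for real capture, H.264 compression, and transmission.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Semaphore-guarded buffer between two pipeline stages."""

    def __init__(self, capacity: int) -> None:
        self._items = deque()
        self._lock = threading.Lock()
        self._free = threading.Semaphore(capacity)  # counts free slots
        self._used = threading.Semaphore(0)         # counts filled slots

    def put(self, item) -> None:
        self._free.acquire()        # block while the buffer is full
        with self._lock:
            self._items.append(item)
        self._used.release()        # wake the consumer

    def get(self):
        self._used.acquire()        # block while the buffer is empty
        with self._lock:
            item = self._items.popleft()
        self._free.release()        # wake the producer
        return item


STOP = object()  # sentinel that shuts the pipeline down


def stage(src: BoundedBuffer, dst: BoundedBuffer, work) -> None:
    """Run one stage: read, process, forward, until STOP arrives."""
    while True:
        item = src.get()
        if item is STOP:
            dst.put(STOP)
            return
        dst.put(work(item))


def run_pipeline(frames) -> list:
    """capture -> compress -> send, with a bounded buffer on every hop."""
    captured, compressed, sent = (BoundedBuffer(2) for _ in range(3))

    def capture() -> None:
        for frame in frames:
            captured.put(frame)
        captured.put(STOP)

    threads = [
        threading.Thread(target=capture),
        threading.Thread(target=stage, args=(captured, compressed, lambda f: "enc(" + f + ")")),
        threading.Thread(target=stage, args=(compressed, sent, lambda f: "tx(" + f + ")")),
    ]
    for t in threads:
        t.start()
    out = []
    while True:
        item = sent.get()
        if item is STOP:
            break
        out.append(item)
    for t in threads:
        t.join()
    return out
```

Bounding each buffer keeps memory use fixed, which fits an embedded target: a slow stage makes the stages before it block instead of letting frames pile up.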

3.2 Echo cancellation

Echo and delay problems appeared early in the system's development. The delay is introduced by the acquisition and transmission process, so it can only be shortened as far as possible; truly instantaneous playback is impossible. This is one of the limitations of the system. The echo arises together with this delay and was eventually eliminated with the open-source Speex algorithm. In practice, the algorithm is compiled into a library file and added to the Linux system, after which the Speex API functions can be used to perform audio echo cancellation.
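The Speex API itself is not reproduced here. To show the principle behind acoustic echo cancellation, the sketch below uses a plain NLMS (normalized least mean squares) adaptive filter, which is a simpler technique than the one Speex implements. It estimates the echo from the far-end (loudspeaker) signal and subtracts that estimate from the microphone signal. The tap count, step size, simulated echo path, and all names are assumptions chosen for illustration.

```python
import random


def nlms_echo_cancel(far_end, mic, taps=4, mu=0.5, eps=1e-8):
    """Return the mic signal with the estimated echo of far_end removed.

    NLMS adapts FIR weights so that the filtered far-end signal matches
    the echo picked up by the microphone.
    """
    weights = [0.0] * taps
    history = [0.0] * taps          # recent far-end samples, newest first
    residual = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        echo_estimate = sum(w * h for w, h in zip(weights, history))
        e = d - echo_estimate       # what remains after cancellation
        power = sum(h * h for h in history) + eps
        step = mu * e / power       # step size normalized by input power
        weights = [w + step * h for w, h in zip(weights, history)]
        residual.append(e)
    return residual


def energy(signal) -> float:
    """Sum of squared samples."""
    return sum(v * v for v in signal)


def demo():
    """Simulate a pure echo (no near-end speech) and measure what is left."""
    rng = random.Random(0)
    far_end = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
    # Assumed echo path: delayed, attenuated copies of the far-end signal.
    mic = [0.6 * far_end[n - 1] + 0.3 * far_end[n - 2] if n >= 2 else 0.0
           for n in range(len(far_end))]
    residual = nlms_echo_cancel(far_end, mic)
    return energy(mic[-200:]), energy(residual[-200:])
```

In `demo()` the residual energy over the last samples is far smaller than the echo energy, because the filter has converged on the simulated echo path. A production canceller such as the one in Speex also has to cope with near-end speech and much longer echo tails, which this sketch ignores.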

3.3 Synchronization of embedded audio and video

The basic idea is to treat the video stream as the master media stream and the audio stream as the slave media stream. The video playback rate stays constant, the actual time is taken from the local system clock, and audio and video are synchronized by adjusting the audio playback speed.
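A minimal sketch of this master/slave adjustment is shown below, assuming the correction is proportional to the drift between the two streams and is clamped so that the change in pitch and tempo stays small. The gain and the 5% limit are illustrative values, not figures from the source.

```python
def audio_rate(video_pts_ms: float, audio_pts_ms: float,
               gain: float = 0.001, max_adjust: float = 0.05) -> float:
    """Return an audio playback-rate factor that pulls audio toward video.

    A positive drift means the audio lags the video (the master stream), so
    the audio is played slightly faster; a negative drift slows it down.
    `gain` and `max_adjust` are assumed values for illustration.
    """
    drift_ms = video_pts_ms - audio_pts_ms
    adjust = max(-max_adjust, min(max_adjust, gain * drift_ms))
    return 1.0 + adjust
```

With these values, audio that is 20 ms behind the video is played about 2% faster, and any drift of 50 ms or more hits the 5% limit.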

First, a local system clock reference (LSCR) is selected and supplied to both the video decoder and the audio decoder. Each decoder compares the PTS (presentation time stamp) value of every frame against the local system clock reference to produce the exact display or playback time for that frame. In other words, when the output data stream is generated, each data block is time-stamped according to the local reference clock (generally with both a start time and an end time). During playback, the timestamp on each data block is read and playback is scheduled according to the local system clock reference.
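The timestamp-driven scheduling can be sketched as follows. The class and function names, the injectable clock, and the 100 ms lateness limit are assumptions introduced for illustration; the source does not specify how late frames are handled.

```python
import time


class PlaybackClock:
    """Maps frame PTS values onto the local system clock reference."""

    def __init__(self, now=time.monotonic):
        self._now = now          # injectable clock, which eases testing
        self._origin = None      # local time at which PTS 0 would play

    def anchor(self, first_pts_s: float) -> None:
        """Tie the first frame's PTS to the current local clock time."""
        self._origin = self._now() - first_pts_s

    def delay_for(self, pts_s: float) -> float:
        """Seconds until the frame is due (negative if it is already late)."""
        if self._origin is None:
            raise RuntimeError("call anchor() before scheduling frames")
        return (self._origin + pts_s) - self._now()


def schedule(clock: PlaybackClock, pts_s: float, late_limit_s: float = 0.1):
    """Decide what to do with a frame: wait, play now, or drop it."""
    delay = clock.delay_for(pts_s)
    if delay > 0:
        return ("wait", delay)
    if delay < -late_limit_s:
        return ("drop", 0.0)     # too late to be useful (assumed policy)
    return ("play", 0.0)
```

Because both decoders consult the same clock object, an audio block and a video frame with the same PTS are due at the same local time.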

The audio and video synchronization data flow of the whole system is shown in Figure 2.

4. Audio and video channel management

To save memory and simplify channel management, this design manages the channels with a thread pool split by channel, so that audio and video are each handled by their own channel.

Audio and video capture share a single thread that uses the select system call. On each pass, the thread checks whether the audio and video devices are ready; if one is, it reads the audio or video data into the corresponding buffer and hands it to the compression thread, which in turn passes it to the sending thread for transmission over TCP. The threads use semaphores to synchronize with one another throughout this TCP-based audio and video software architecture. After sending, control passes to the receiving thread, which waits for the peer to send audio and video data. When the receiving thread gets data, the packet header is examined, the payload is handed to the decompression thread, and the audio or video is played. The same sequence applies when the peer sends data to the local machine.
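The select-based readiness check in the capture thread can be sketched like this. On the real board the two descriptors would be the audio and camera devices; here local socket pairs stand in for them so the example is self-contained. The function names and the 4096-byte read size are assumptions.

```python
import select
import socket


def poll_devices(devices: dict, timeout: float = 0.1) -> dict:
    """Return {name: data} for every device that has data ready to read.

    `devices` maps a label such as "audio" or "video" to a readable object.
    """
    names = {dev: name for name, dev in devices.items()}
    readable, _, _ = select.select(list(names), [], [], timeout)
    return {names[dev]: dev.recv(4096) for dev in readable}


def demo() -> dict:
    """Use local socket pairs as stand-ins for the audio and video devices."""
    audio_src, audio_dev = socket.socketpair()
    video_src, video_dev = socket.socketpair()
    try:
        video_src.sendall(b"video-sample")   # only the video device has data
        return poll_devices({"audio": audio_dev, "video": video_dev})
    finally:
        for s in (audio_src, audio_dev, video_src, video_dev):
            s.close()
```

A single `select` call lets one thread serve both devices without busy-waiting, which is why the design needs only one capture thread.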

Thanks to the high processing speed of the processor and the efficient hardware H.264 video decoding, the system essentially meets its real-time requirements. The embedded audio and video management module handles overall system control and real-time processing, providing a reliable basis for audio and video data management.

5. Conclusion

Video surveillance products based on embedded wireless terminals are popular because they need no wiring, transmit over long distances, adapt well to different environments, perform stably, and make communication convenient. They play an irreplaceable role in security surveillance, patrol communication, construction liaison, personnel deployment, and similar settings. This system is a handheld wireless audio and video communication terminal based on embedded Linux. It is small and easy to carry. A lithium battery powers it through a switching power supply chip, which improves the power efficiency of the whole system. It can be used for outdoor visual entertainment, construction site monitoring, large-scale security liaison, and similar tasks, and has broad application prospects.

May 17, 2024

May 11, 2024

